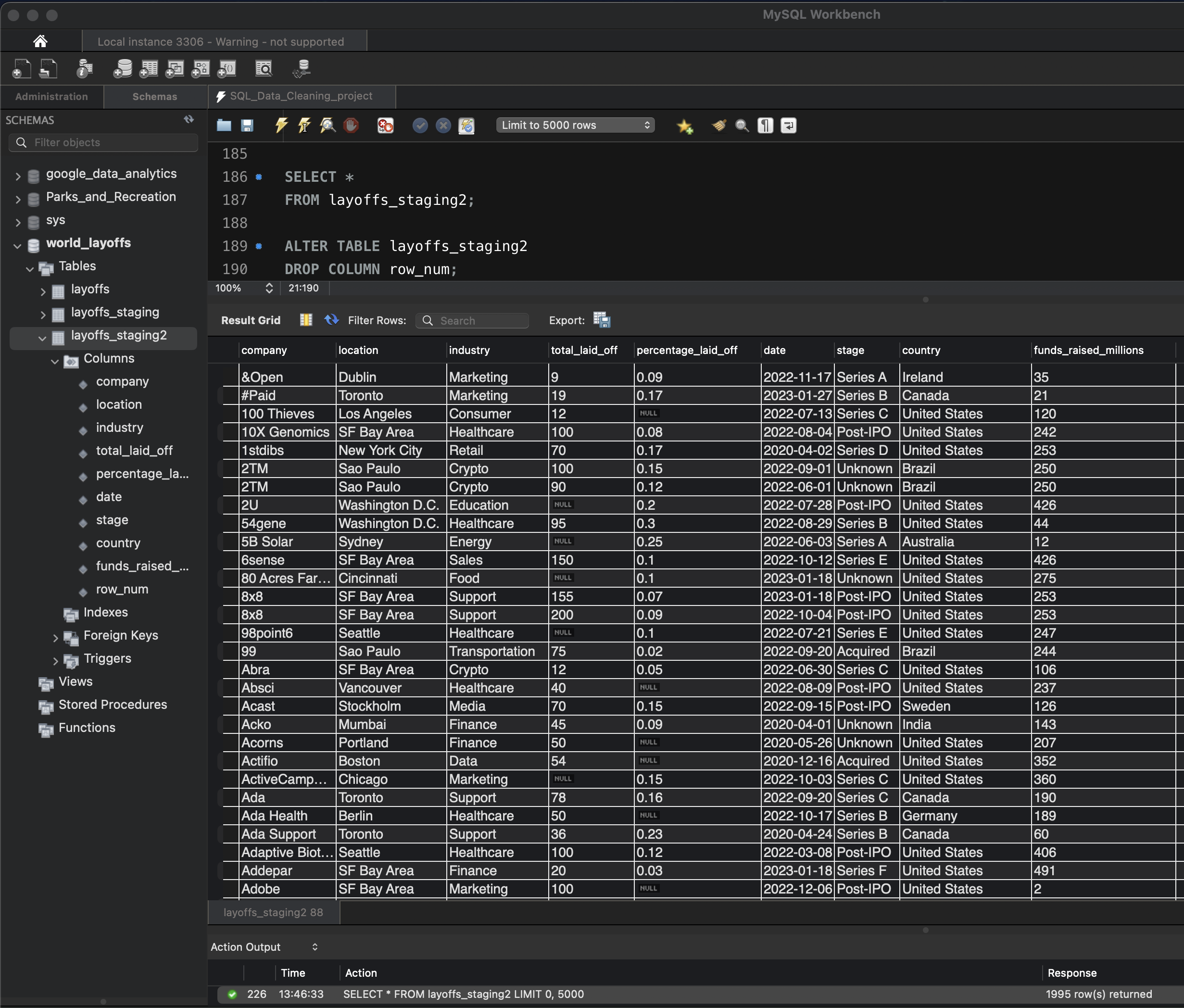

SQL Data Cleaning Project: Layoffs Data

Welcome to my SQL data cleaning project! In this project, I tackled a dataset with over 2,300 records detailing layoffs across various industries. Using MySQL, I imported, cleaned, and standardized the data to ensure its usability and accuracy. Below, you can read more about the challenges I faced, the steps I took, and the tools and techniques I used to achieve my goals.

Objective

Clean and standardize a dataset of 2,361 records detailing layoffs across various industries to ensure data quality and usability.

Challenges

Data Import Issues: Initially, MySQL only imported 540 of the 2,361 records from a .csv file. After troubleshooting, I resolved this by converting the file to .json format, which allowed all records to be imported successfully.

Steps Taken

-

Data Import:

Imported a

.jsonfile to successfully include all 2,361 records into the MySQL database. -

Data Cleaning:

- Removing Duplicates: Identified and deleted duplicate records using a

ROW_NUMBER()function with aPARTITION BYclause. - Standardizing Data:

- Trimmed unnecessary spaces from text fields.

- Standardized industry names (e.g., consolidated variations of "Crypto").

- Reformatted date values to a consistent

DATEformat usingSTR_TO_DATE.

- Handling Missing/Null Values:

- Identified and replaced missing or blank values where possible by cross-referencing other entries.

- Deleted records where critical fields (e.g.,

total_laid_off,percentage_laid_off) were missing.

- Column Optimization: Dropped unnecessary columns (

row_numafter deduplication was complete).

- Removing Duplicates: Identified and deleted duplicate records using a

- Validation: Verified data consistency and integrity through targeted queries.

Tools Used

- Database: MySQL

- File Formats:

.csvand.json

Key SQL Techniques

ROW_NUMBER()withPARTITION BYfor deduplication.- String functions (

TRIM,LIKE) for standardization. STR_TO_DATEfor date conversion.- Joins and subqueries for filling missing values.

Results

Cleaned and standardized a dataset of 2,361 records. Prepared data for further analysis and visualization.